This article began as an attempt to document some of the many development projects behind a knowledge graph for running the MSP where I was a partner. I have since exited the partnership, and wanted to share some of the results of my knowledge graph project with others.

In 2011 I merged my IT consultancy business with a relatively new MSP startup.

I was responsible for the service delivery side of our business. We sold several (too many IMHO) customizable managed user service plans, as well as hardware, software, consulting and integration services. Add to that private cloud hosting, and by 2016 we were starting to add public cloud (Office 365 and later Azure).

This presented several challenges around tracking and accuracy:

• Do all the right users have all the right services provisioned?

• Are all the endpoints and servers added to RMM, and AV protected (with OS patching)?

• Are we billing for the right number of users/services/plans?

• Have we de-provisioned and offboarded services for terminated users?

• Are all mailboxes, endpoints and servers backed up? Are they even IN the backup system?

• Did all critical infrastructure make it into our monitoring and NOC?

I knew that to execute a truly comprehensive solution to this problem you ultimately need to be able to easily answer 3 pretty basic questions:

Given a client, show me ALL THE USERS/DEVICES under plan, and a list (or better yet - create a ticket) for any services that are UNDERPROVISIONED or OVERPROVISIONED so that we can correct them.

Have any NEW users/devices shown up, and are they ALSO supposed to be on-plan?

Has the client TERMINATED any users/devices, and should they be REMOVED from service?
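To make the three questions concrete, here is a minimal Python sketch of the checks they imply. It is only an illustration, not the system we built: the `User` record, the `PLAN_SERVICES` bundle, and the sample names are all hypothetical, and a real audit would pull this data from the PSA, RMM and vendor portals.

```python
from dataclasses import dataclass, field

# Hypothetical bundle: the services every on-plan user should receive.
PLAN_SERVICES = {"office365", "antivirus", "patching", "email_backup"}

@dataclass
class User:
    name: str
    on_plan: bool                 # False means explicitly exempt from the plan
    active: bool = True           # False means terminated at the client
    provisioned: set = field(default_factory=set)

def audit(users):
    """Question 1: map each mismatched user to (missing, unexpected) services."""
    findings = {}
    for u in users:
        # Exempt or terminated users should have nothing provisioned.
        expected = PLAN_SERVICES if (u.on_plan and u.active) else set()
        missing = expected - u.provisioned    # underprovisioned
        extra = u.provisioned - expected      # overprovisioned
        if missing or extra:
            findings[u.name] = (missing, extra)
    return findings

def diff_directory(known, discovered):
    """Questions 2 and 3: return (new names, names that have disappeared)."""
    return discovered - known, known - discovered

users = [
    User("alice", on_plan=True, provisioned=set(PLAN_SERVICES)),
    User("bob", on_plan=True, provisioned={"office365", "antivirus"}),
    User("carol", on_plan=True, active=False, provisioned={"office365"}),
    User("svc-scanner", on_plan=False),
]

for name, (missing, extra) in audit(users).items():
    print(name, "underprovisioned:", sorted(missing), "overprovisioned:", sorted(extra))

new, gone = diff_directory({"alice", "bob", "carol"}, {"alice", "bob", "dave"})
print("new:", sorted(new), "gone:", sorted(gone))
```

The logic itself is just set arithmetic. The hard part, as the rest of this article describes, is getting trustworthy "expected" and "provisioned" sets out of a dozen disconnected systems.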

Sounds simple enough, right? The problem was very quickly apparent.

We didn't have a single system that could answer those 3 questions.

To build a shared understanding, you would need a list of WHAT YOU ARE DELIVERING and WHAT IS EXEMPT so there could be collaboration between your service director, account manager, and client.

Even if you had a fully integrated PSA/RMM tool (which we didn't), would that system ALSO check that 3rd-party services (Office, spam filter, backup systems, etc.) were properly provisioned? It seems unlikely. Would that system even know if a particular device/user was subject to service add-ons or exemptions?

Our systems knew the client had a contract, but they didn't know WHICH users/devices were subscribed to the support plan, and which were exempt.

We knew how our service bundles were built (a user received an Office 365 license, patch management, antivirus, email backup, infrastructure monitoring, and service desk support) but there was no easy way to ask: ARE all those services ACTUALLY provisioned? It basically meant a lot of manual reporting, and by the time you completed an audit of ALL THOSE SERVICES for one client, it was already out-of-date, because the client had likely added/removed users or changed a-la-carte upgrades/downgrades.

Up to this point we had primarily relied on storing data within our clients' Active Directory to track users that were part of a plan. We marked as exempt those accounts that weren't plan users. Users' group membership also helped us track licensing/subscription (we were doing some SPLA/multi-tenant hosting as well).

A script ran monthly that queried the client's directory, assembled an Excel billing/usage report, and uploaded it to us.

This improved our tracking and billing accuracy. We could also compare usage against what we were billed by our vendors/distribution. But it didn't give us the ability to verify service implementation. For that we still relied on our implementation SOPs. If something got missed, a manual audit would be required to discover the problem. If an audit wasn't performed, we had to hope someone noticed (hopefully our employee, but often enough it was our client). Not ideal!

An interim solution was exporting lists of users/devices from our RMM discovery tool, and then importing them back into our asset management/PSA/CRM systems. That DID make for a more accurate auditing process, but it didn't dramatically improve the dynamic service trackability we were after. It also did nothing to dynamically (or automatically) verify proper implementation.

At the end of 2017 we merged with another organization, and one of the principals joining us had extensive SQL Server development experience. I had SQL experience myself, but it was primarily from the support/infrastructure/best practices side, and I really didn't have any experience with database development or table architecture/design.

I thought this would be the perfect solution! We could just build a data warehouse in SQL Server to answer all these questions!

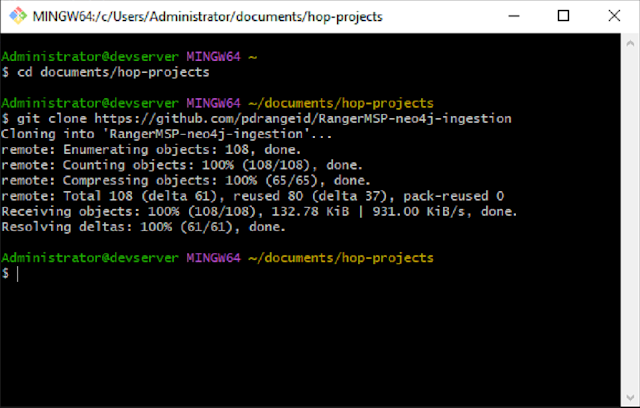

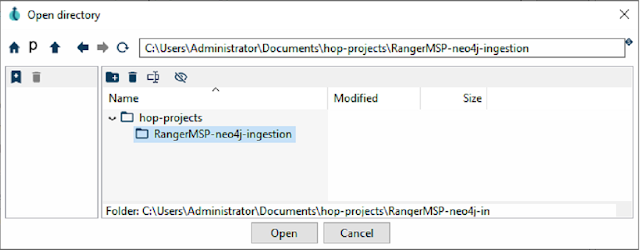

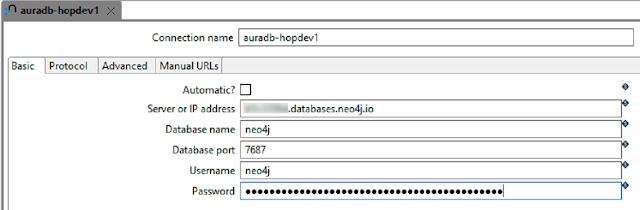

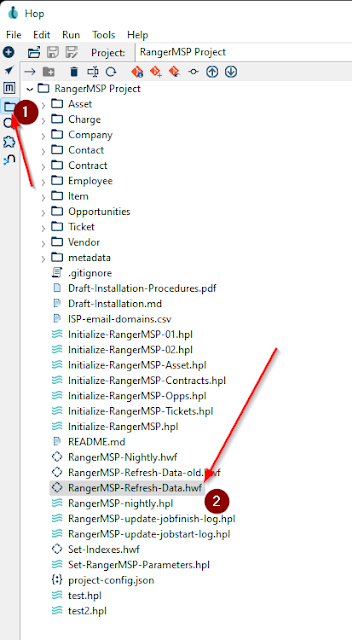

At the time we were using RangerMSP, fka CommitCRM (PSA/ITAM/CRM), Managed Workplace for RMM (patching/AV/automation), and CheckMK (a Nagios-based NOC monitoring system).

So away we went - we ingested data from all 3 systems into a SQL database, and then began writing queries that would JOIN views together so we could discover discrepancies.
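As a rough idea of what one of those discrepancy queries looks like, here is a small sketch using Python's built-in `sqlite3` module as a stand-in for SQL Server. The table names (`psa_assets`, `rmm_devices`, `monitored_hosts`) and the sample rows are hypothetical, not our actual warehouse schema, and matching on hostname is an assumption that real data rarely honors so cleanly.

```python
import sqlite3

# In-memory SQLite database standing in for the SQL Server warehouse.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE psa_assets (hostname TEXT PRIMARY KEY, client TEXT);
    CREATE TABLE rmm_devices (hostname TEXT PRIMARY KEY);
    CREATE TABLE monitored_hosts (hostname TEXT PRIMARY KEY);
    INSERT INTO psa_assets VALUES ('srv01', 'acme'), ('pc07', 'acme'), ('pc09', 'acme');
    INSERT INTO rmm_devices VALUES ('srv01'), ('pc07');
    INSERT INTO monitored_hosts VALUES ('srv01');
""")

# Every asset the PSA knows about that is missing from RMM or from monitoring.
# Each additional system to verify means one more LEFT JOIN and one more
# NULL check in the WHERE clause.
rows = conn.execute("""
    SELECT a.hostname,
           r.hostname IS NOT NULL AS in_rmm,
           m.hostname IS NOT NULL AS in_monitoring
    FROM psa_assets a
    LEFT JOIN rmm_devices r ON r.hostname = a.hostname
    LEFT JOIN monitored_hosts m ON m.hostname = a.hostname
    WHERE r.hostname IS NULL OR m.hostname IS NULL
    ORDER BY a.hostname
""").fetchall()

for hostname, in_rmm, in_monitoring in rows:
    print(hostname, "in RMM:", bool(in_rmm), "in monitoring:", bool(in_monitoring))
```

With three tidy tables this is manageable. The real views were far messier, and the query grows with every system added.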

I almost immediately saw this was not going to work. Just querying those 3 platforms required complex JOINs. I knew a little T-SQL, but I was heavily reliant on our SQL expert to design the database, and write the stored procedures and views.

I knew the relationships between users/devices/services/tickets very well. My database expert was relatively new to managed services, so he was looking to me to provide the layout of the data. Unfortunately the joins were so complicated that I knew I would be unable to assist in any of the database design. This was going to make the implementation very inefficient.

But hold on… WAIT! - We only had 3 systems ingested so far. We would also need to add Office 365, Azure, 3 backup providers, Active Directory, spam filtering, security training, another RMM vendor, QuoteWerks and VMware data into the warehouse!

Also - JOINs are slow. They are computed EVERY time you query, and the MORE data and the MORE JOINs involved, the slower the performance.

The complexity of adding all those to create a comprehensive view was staggering to even contemplate.

I was disillusioned. At about the same time, a colleague of mine had been telling me about graph databases.

I had read a bit about them and watched a few videos. So when he suggested we attend a conference to learn more, I was all-in and attended the Neo4j GraphConnect conference in New York City in 2018.

Hearing about real-life use cases and seeing Neo4j graph databases in action convinced me. I started writing Cypher code on my laptop on the plane ride home. The knowledge graph project really started in earnest after this.

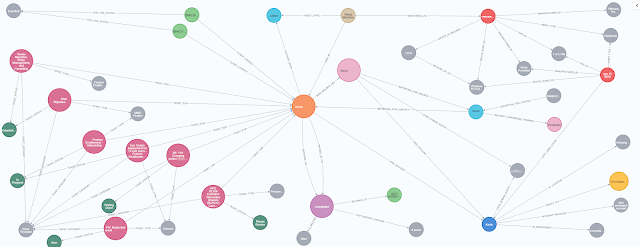

First - what is a knowledge graph? If you aren't familiar, for our purposes here it is a representation of a network of devices, events, concepts and users that also captures the relationships between those items. It is stored in a schema-less node/edge graph database.

If you need to integrate structured, unstructured, and semi-structured data from several sources, a knowledge graph is a strong fit.

Knowledge graphs are used as a solution to the problems presented by our data being siloed in the various systems we use to manage/support our clients in an MSP.

This allows you to ask complex relationship questions about your clients, their employees, tickets, charges, contracts and opportunities. From there I use this to facilitate automation, validation workflows and other processes.
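To give a feel for the node/edge idea, here is a tiny in-memory sketch in plain Python. It is not Neo4j and not the graph I built; the `Graph` class, the relationship names (`EMPLOYS`, `SUBSCRIBED_TO`, `INCLUDES`, `HAS`) and the sample data are all hypothetical, chosen only to show how a provisioning question becomes a walk along relationships instead of a stack of JOINs.

```python
from collections import defaultdict

class Graph:
    """Minimal labeled node/edge store (illustration only)."""

    def __init__(self):
        self.labels = {}                # node id -> label
        self.edges = defaultdict(set)   # (source id, relationship) -> target ids

    def add_node(self, node_id, label):
        self.labels[node_id] = label

    def add_edge(self, source, rel, target):
        self.edges[(source, rel)].add(target)

    def neighbors(self, node_id, rel):
        return self.edges.get((node_id, rel), set())

def provisioning_gaps(graph, client):
    """For each user of the client, list plan services they do not have."""
    gaps = {}
    for user in graph.neighbors(client, "EMPLOYS"):
        expected = set()
        for plan in graph.neighbors(user, "SUBSCRIBED_TO"):
            expected |= graph.neighbors(plan, "INCLUDES")
        missing = expected - graph.neighbors(user, "HAS")
        if missing:
            gaps[user] = sorted(missing)
    return gaps

g = Graph()
g.add_node("acme", "Client")
g.add_node("gold", "Plan")
for service in ("office365", "antivirus", "email_backup"):
    g.add_node(service, "Service")
    g.add_edge("gold", "INCLUDES", service)
for user in ("alice", "bob"):
    g.add_node(user, "User")
    g.add_edge("acme", "EMPLOYS", user)
    g.add_edge(user, "SUBSCRIBED_TO", "gold")
for service in ("office365", "antivirus", "email_backup"):
    g.add_edge("alice", "HAS", service)
for service in ("office365", "antivirus"):
    g.add_edge("bob", "HAS", service)

gaps = provisioning_gaps(g, "acme")
print(gaps)
```

Adding another data source in this model means adding more nodes and relationships, and the traversal above does not need to change. In a graph database the same walk would be written as a pattern query rather than hand-rolled loops.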

…Continued in "A knowledge graph for an MSP"